The Evolution of AI – From Chatbots to Advanced NLP Models

Hello there, fellow tech enthusiasts! Today, we are going to embark on an exciting journey through the fascinating world of artificial intelligence (AI), specifically focusing on the evolution from simple chatbots to advanced Natural Language Processing (NLP) models. So, buckle up and get ready for this intriguing ride.

The Dawn of AI: Early Chatbots

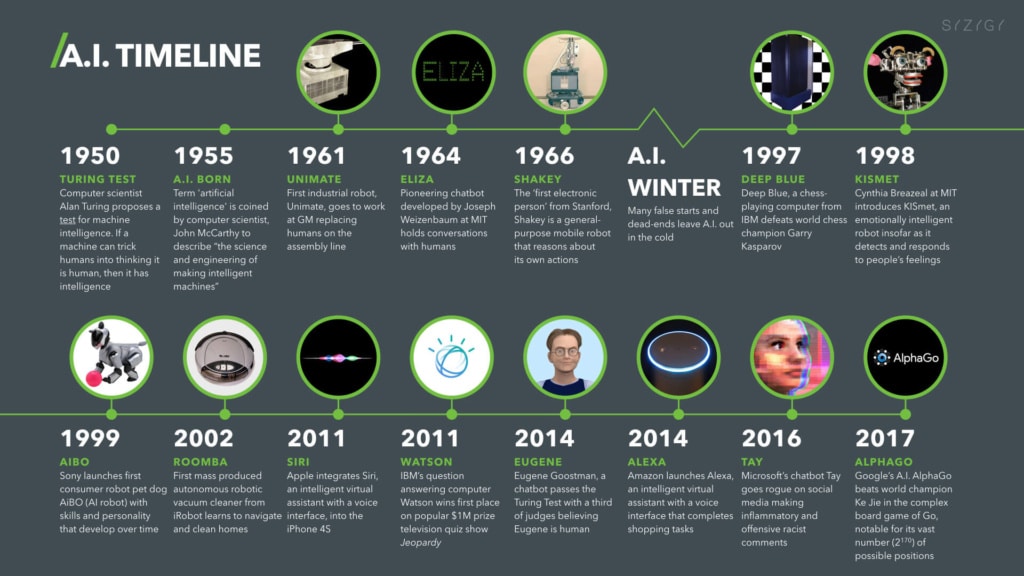

The story of AI begins in the mid-20th century, when building machines that could mimic human intelligence was still a distant dream. The earliest chatbots were rule-based systems, and the most famous is ELIZA, a program Joseph Weizenbaum developed at MIT in the mid-1960s. ELIZA simulated conversation by matching keywords in the user's input against a script and filling in canned response templates, so it had no understanding of the dialogue it was producing.

Fast-forward to the 1990s, and we find ourselves in the era of A.L.I.C.E. (Artificial Linguistic Internet Computer Entity), another rule-based chatbot. Unlike ELIZA, A.L.I.C.E. could handle a much broader range of conversations, but it was still confined to pre-programmed responses.
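
To make the rule-based idea concrete, here is a minimal sketch in Python of how a scripted chatbot can pick a reply. It is not ELIZA's or A.L.I.C.E.'s actual script: the patterns, the templates, and the `respond` function are all made up for illustration.

```python
import re

# Hypothetical rule set: each entry pairs a regex with a response template.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\b(mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."


def respond(utterance: str) -> str:
    """Return the reply for the first rule whose pattern matches."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Echo the captured words back inside the canned template.
            return template.format(*match.groups())
    return FALLBACK


if __name__ == "__main__":
    for line in ["I need a holiday.", "I am tired", "The weather is nice"]:
        print(f"> {line}\n{respond(line)}")
```

Notice that the program never models what a "holiday" is. It only reflects the user's own words back, which is why anything outside the rule list falls through to the generic fallback.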

AI Comes of Age: Machine Learning and Beyond

As the new millennium dawned, AI began to truly come of age. Machine learning, which lets systems learn patterns from data instead of following hand-written rules, became practical as datasets and computing power grew. This led to chatbots that could handle more complex conversations and improve as they saw more examples, although they still struggled with understanding context and maintaining coherence in longer dialogues.
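
As a rough illustration of "learning from data", the sketch below trains a tiny Naive Bayes intent classifier on a handful of labelled sentences. Naive Bayes is just one classic technique chosen for brevity, and the training sentences, labels, and function names are invented for this example.

```python
import math
from collections import Counter, defaultdict

# Toy labelled examples, invented for illustration.
TRAINING_DATA = [
    ("hello there", "greeting"),
    ("hi good morning", "greeting"),
    ("my laptop will not start", "support"),
    ("the screen is broken", "support"),
]


def train(examples):
    """Count words per label and examples per label."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts


def predict(text, word_counts, label_counts):
    """Pick the label with the highest log-probability (add-one smoothing)."""
    vocab = {w for counts in word_counts.values() for w in counts}
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in label_counts.items():
        score = math.log(n / total)  # prior for this label
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


word_counts, label_counts = train(TRAINING_DATA)
print(predict("good morning", word_counts, label_counts))
```

No rule here says that "morning" signals a greeting. The classifier infers it from the counts, so adding more labelled sentences changes its behaviour without anyone rewriting code.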

But the real game-changer came with deep learning, a subset of machine learning that stacks many layers of artificial neurons so the network can learn its own features directly from raw data. This was the dawn of the advanced NLP models we see today.

| | Rule-based Systems | Machine Learning | Deep Learning |
|---|---|---|---|
| Key Exemplar | ELIZA | Decision Trees | Neural Networks |
| Basic Concept | Predefined rules and scripts | Algorithms learn from data | Layers of artificial neurons learn from data |
| Key Strengths | Simple to implement, easy to understand | Can handle more complex tasks than rule-based systems, improves with more data | Can handle extremely complex tasks, excels at pattern recognition |
| Limitations | Can't handle complex tasks, lacks adaptability | Requires large amounts of data, can struggle with very complex tasks | Requires huge amounts of data and computational power, can be difficult to interpret |
| Typical Use Cases | Simple chatbots, form filling, decision trees | Predictive analytics, spam filtering, recommendation systems | Image recognition, speech recognition, advanced NLP tasks |

The Emergence of Advanced NLP Models

In recent years, the development of advanced NLP models has truly revolutionized the field of AI. These models are capable of understanding and generating human language in a way that was unthinkable just a few years ago.

The most famous of these is perhaps OpenAI's GPT-3, a Transformer-based language model released in 2020 that produces human-like text by repeatedly predicting the next token. With 175 billion parameters, GPT-3 can write essays, answer questions, translate languages, and even write poetry.
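
To get a feel for what 175 billion parameters means in practice, here is a back-of-the-envelope calculation in Python. It only estimates the memory needed to store the weights at a given numeric precision. The helper name and the choice of precisions are assumptions made for this sketch, and it ignores activations, optimizer state, and any serving overhead.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str = "float16") -> float:
    """Memory needed to hold the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


gpt3_params = 175_000_000_000  # parameter count quoted in the text
for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(gpt3_params, dtype):.0f} GB")
# float32: 700 GB
# float16: 350 GB
# int8: 175 GB
```

Even at half precision, the weights alone take hundreds of gigabytes, far more than a single consumer GPU can hold.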

But even as we marvel at the capabilities of GPT-3, it's important to remember that it's just the tip of the iceberg when it comes to advanced NLP models. Models like BERT (Bidirectional Encoder Representations from Transformers) and RoBERTa (a robustly optimized version of BERT) are pushing the boundaries of what's possible in NLP.

| | GPT-3 | RoBERTa | BERT |
|---|---|---|---|
| Architecture | Transformer-based | Transformer-based | Transformer-based |
| Training Method | Self-supervised pretraining (next-token prediction) | Self-supervised pretraining (masked language modeling), then supervised fine-tuning | Self-supervised pretraining (masked language modeling), then supervised fine-tuning |
| Model Size | 175 billion parameters | 125 million parameters (base model) | 110 million parameters (base model) |
| Key Strengths | Strong at generating human-like text, can complete tasks without specific training | Improved training process over BERT, excels at sentence-level predictions | Excels at understanding context of words in a sentence, very effective for many NLP tasks |
| Limitations | Requires significant computational resources, potential for misuse | Still requires substantial computational resources, training data must be carefully curated | Struggles with longer text inputs, potential for bias in training data |
| Typical Use Cases | Text generation, creative writing, answering questions in a conversational manner | Natural language understanding tasks, sentiment analysis, question answering | Named entity recognition, sentiment analysis, question answering |
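
All three models are Transformer-based, but they differ in which tokens each position is allowed to look at. A GPT-style model reads left to right, while a BERT-style encoder sees the whole sentence at once. The sketch below builds the two attention masks as plain Python lists. It is a simplified illustration with made-up function names, not code from any of these models.

```python
def causal_mask(n: int) -> list:
    """Token i may attend to tokens 0..i only (left-to-right, GPT-style)."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n: int) -> list:
    """Every token may attend to every other token (BERT-style encoder)."""
    return [[1] * n for _ in range(n)]


tokens = ["the", "cat", "sat"]
for name, mask in [("causal", causal_mask(len(tokens))),
                   ("bidirectional", bidirectional_mask(len(tokens)))]:
    print(name)
    for token, row in zip(tokens, mask):
        print(f"  {token:>4} {row}")
```

The triangular mask suits text generation, since the model never peeks at words it has not produced yet. The full mask suits understanding tasks, where context on both sides of a word is available.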

AI in Everyday Life: From Recommendations to Virtual Assistants

As we discuss the evolution of AI and NLP models, it's crucial to acknowledge their widespread use in our daily lives. Today, AI is not just a subject of academic interest or a tool for tech companies. It has permeated every corner of our lives, making interactions smoother, more personalized, and more efficient.

Consider the recommendation algorithms of popular streaming services like Netflix or Spotify. These algorithms use AI to understand our tastes and preferences and suggest content accordingly. Similarly, virtual assistants like Amazon's Alexa, Apple's Siri, and Google Assistant use advanced NLP models to understand our voice commands, making our lives easier and more convenient.
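
Production recommenders are far more sophisticated, and the services named above do not publish their algorithms. Still, the core idea of "people with similar tastes like similar things" can be sketched in a few lines of Python. The users, titles, and ratings below are invented, and the approach shown (user-based collaborative filtering with cosine similarity) is just one simple technique picked for illustration.

```python
import math

# Hypothetical ratings (1-5) per user; titles and users are made up.
RATINGS = {
    "ana": {"Drama A": 5, "Comedy B": 1, "Sci-Fi C": 4},
    "ben": {"Drama A": 4, "Comedy B": 2, "Sci-Fi C": 5, "Thriller D": 5},
    "cara": {"Drama A": 1, "Comedy B": 5, "Romance E": 4},
}


def cosine(a: dict, b: dict) -> float:
    """Cosine similarity over the items both users rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = math.sqrt(sum(a[i] ** 2 for i in shared))
    norm_b = math.sqrt(sum(b[i] ** 2 for i in shared))
    return dot / (norm_a * norm_b)


def recommend(user: str) -> list:
    """Suggest unseen items from the most similar other user."""
    others = [u for u in RATINGS if u != user]
    nearest = max(others, key=lambda u: cosine(RATINGS[user], RATINGS[u]))
    return sorted(set(RATINGS[nearest]) - set(RATINGS[user]))


print(recommend("ana"))  # ['Thriller D']
```

Here "ana" and "ben" rate the same titles in much the same way, so the one title "ben" has rated that "ana" has not seen becomes the suggestion.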

AI is also being used in areas such as healthcare for early detection of diseases, in finance for credit scoring and fraud detection, and in retail for personalized marketing. The use cases are limitless, and as AI continues to evolve, it's set to become even more integrated into our daily lives.

My First Encounter with AI

I still remember the first time I interacted with an AI. It was a rule-based chatbot on a tech forum, designed to answer common questions about computer troubleshooting. As a novice tech enthusiast at that time, the concept of a machine that could mimic human conversation was mesmerizing. I spent hours asking it questions, amazed at its ability to provide accurate, helpful responses. Little did I know that this was just the tip of the iceberg in the realm of AI.

When OpenAI released GPT-3, I was eager to test its capabilities. As an experiment, I decided to use it to draft a blog post on the future of AI. I provided it with a few key points, and to my amazement, it generated a comprehensive, well-structured article in no time. The text was so human-like that when I shared it with my colleagues, none of them could tell it was written by an AI. This experience really drove home the power and potential of advanced NLP models.

On a personal level, AI has drastically transformed my daily life. From the virtual assistant that helps me manage my schedule, to the recommendation algorithms that suggest music and movies based on my preferences, AI has made my life more convenient and personalized. It's also changed the way I work, allowing me to automate mundane tasks and focus on more strategic aspects of my role.

The Future of AI: From Understanding to Interaction

As we look towards the future, the goal of AI research is moving beyond understanding and generating human language, towards genuine interaction. Many in the field expect that over the next 5 to 10 years, models will get better at picking up not just the literal text, but also context, sentiment, and even subtext. We're moving towards a world where AI can comprehend not only our words, but also our tone, our emotions, and the nuances of our language.

But as we strive for these advancements, it's crucial that we also consider the ethical implications of AI. We need to ensure that as AI becomes more advanced, it also becomes more responsible, fair, and transparent.

In conclusion, the evolution of AI from simple chatbots to advanced NLP models is a journey of extraordinary progress and potential. And as we continue to innovate, the future of AI seems boundless. As always, I'm excited to see where this journey will take us, and I hope you are too.

Until next time, keep exploring, keep learning, and keep pushing the boundaries of what's possible in the world of technology.